The Comprehensive Guide to the robots.txt File for SEO!

How do you use robots.txt to control correctly whether your website is included in the search index, and how can you positively influence its ranking?

At the beginning of July 2019, Google announced that it would no longer support the noindex rule in robots.txt. Since then, it has been sending emails via Google Search Console asking site owners to remove this entry.

But even if you haven’t received such an email, you will find tips here on how to improve your Google ranking by cleverly controlling crawling. If you have received the email from Google but don’t know how to proceed, there are also a few specific tips for you.

1. What is the robots.txt?

The robots.txt is a plain text file located in the root directory of the website on the server, e.g. https://example.com/robots.txt. It tells search engine crawlers which directories or files they may and may not access, and so it controls crawling behaviour.
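Because the file always sits at the root of the host, you can derive its address from any page URL. A minimal Python sketch could look like this (the function name `robots_url` is made up for this example). Keep in mind that every combination of protocol and host, including each subdomain, has its own robots.txt.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url(page_url: str) -> str:
    """Return the robots.txt address that governs the given page URL.

    robots.txt always sits at the root of a host, so only the scheme and the
    network location (host and any port) are kept; path, query and fragment
    are dropped.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_url("https://example.com/blog/my-post/?utm_source=newsletter"))
# https://example.com/robots.txt
print(robots_url("https://shop.example.com:8443/category/shoes/"))
# https://shop.example.com:8443/robots.txt
```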

You do not necessarily need such a file if crawlers may access all pages, images and PDFs, or if you have already excluded individual pages with the noindex tag (you can find out how this works in WordPress below). By default, crawlers assume they may see everything on a website. The next point explains why it often doesn’t make sense to offer everything to crawlers.
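To see how crawlers read such rules, you can test a robots.txt locally with Python's built-in `urllib.robotparser`. The file content below is a hypothetical WordPress example, not a recommendation for your site. One caveat: this parser applies the first matching rule, while Google applies the most specific (longest) one. Listing the Allow line first makes both interpretations agree. The last line shows the default described above: an empty robots.txt blocks nothing.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt for a WordPress site (illustrative only).
# The Allow line comes first because urllib.robotparser applies the FIRST
# matching rule, whereas Google applies the most specific (longest) one.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /search/
"""

def parse_robots(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text locally, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

rules = parse_robots(ROBOTS_TXT)
for path in ("/blog/my-post/", "/wp-admin/", "/wp-admin/admin-ajax.php", "/search/shoes/"):
    print(path, rules.can_fetch("Googlebot", "https://example.com" + path))
# /blog/my-post/ True
# /wp-admin/ False
# /wp-admin/admin-ajax.php True
# /search/shoes/ False

# An empty robots.txt blocks nothing: crawlers may fetch every URL.
print(parse_robots("").can_fetch("Googlebot", "https://example.com/any/page/"))  # True
```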

2. What does the noindex tag do and why is it important?

Basically, this tag keeps a single page out of the Google search index. If the tag is present in the HTML code of the page, Googlebot recognizes during the next crawl that the page should be excluded from the search results. Other search engines also support this tag.
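The tag itself is a single line in the page's `<head>`, for example `<meta name="robots" content="noindex">`. To check a page's HTML for it, you could use a minimal sketch built on Python's built-in `html.parser`. The class and function names are made up for this example. The sketch only looks at meta tags named `robots` or `googlebot`.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser already lower-cases tag and attribute names; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").strip().lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())

def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tags tell search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives

page = '<html><head><meta name="robots" content="noindex, follow"></head><body>Hello</body></html>'
print(has_noindex(page))  # True
```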

It is generally important to consider carefully whether to restrict the visibility of less valuable pages, because too many pages with little added value for users can have a negative effect on the ranking.

Examples of less valuable content are duplicate content created by the search function, by categories and keywords, or by filter options. All of these keep generating new URLs that Google would have to crawl. Such URLs, which can run into the thousands with filters, ultimately always show the same content. Every website has a certain “crawl budget”. If Google spends it on many similar pages, it may not crawl and index the right pages, and the ranking in Google Search can deteriorate.
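To get a feeling for how quickly filter options multiply URLs, the following Python sketch counts the URL variants of a single, hypothetical shop category page. The filter names and the number of options are invented for illustration, and each filter can also be left unset.

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical filter options for ONE category page of a shop (invented numbers).
FILTERS = {
    "color": ["red", "blue", "green", "black", "white"],        # 5 options
    "size": ["xs", "s", "m", "l", "xl", "xxl"],                 # 6 options
    "brand": [f"brand-{i}" for i in range(1, 11)],              # 10 options
    "sort": ["price-asc", "price-desc", "newest", "popular"],   # 4 options
}

def filter_urls(base_url, filters):
    """Yield every URL variant; each filter is optional (None means 'not set')."""
    names = list(filters)
    choices = [[None] + options for options in filters.values()]
    for combination in product(*choices):
        params = {name: value for name, value in zip(names, combination) if value is not None}
        yield base_url + ("?" + urlencode(params) if params else "")

urls = list(filter_urls("https://example.com/shoes/", FILTERS))
print(len(urls))  # 2310, i.e. (5+1) * (6+1) * (10+1) * (4+1)
```

A single category page thus turns into 2,310 crawlable URLs that show essentially the same products. This is the crawl budget problem described above.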

3. Why does Google no longer support noindex in robots.txt?

Putting noindex in the robots.txt has never been the right approach, especially when the page also carries the noindex tag in its HTML code. If the robots.txt stops the crawler from fetching the page, the crawler never sees the noindex instruction in the HTML. The page can therefore still appear in the search results, for example when other pages link to it.
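Python's `urllib.robotparser` is not Googlebot, but it behaves the same way in this respect and makes the problem easy to see. In this hypothetical robots.txt, the parser simply ignores the `Noindex` line. The `Disallow` line, on the other hand, stops the crawler before it could ever read a noindex tag in the page's HTML.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that still contains the retired "Noindex" line.
ROBOTS_TXT = """\
User-agent: *
Noindex: /old-landing-page/
Disallow: /private-offers/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The unknown "Noindex" field is skipped, so the page stays crawlable and indexable.
print(parser.can_fetch("Googlebot", "https://example.com/old-landing-page/"))  # True

# "Disallow" only blocks crawling: a noindex meta tag on this page is never read,
# so the URL can still be indexed if other pages link to it.
print(parser.can_fetch("Googlebot", "https://example.com/private-offers/"))  # False
```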

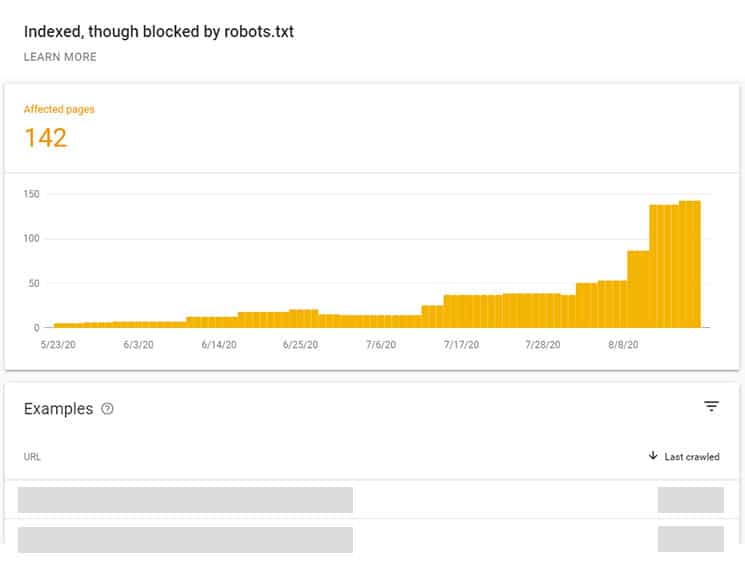

In Search Console, you will find hints if there are unintentional errors here, e.g. the status “Indexed, though blocked by robots.txt”.

4. Where can I see what is entered in the robots.txt?

In Google Search Console, you will find the robots.txt Tester, which currently still leads to the old Search Console. Choose a verified property, and the tester shows your current robots.txt file, which you can edit directly in the tool. Once your edits are complete and your robots.txt looks the way you want, click Submit. The tester does not change the file on your server, so you still have to upload the edited robots.txt to the root directory of your website.

5. Where can I set a page or the entire website to noindex in WordPress?

If a WordPress website is still under construction and should not yet be visible in search engines, you can control this in the WordPress settings. Until now, however, this setting placed “follow” and “nofollow” entries in the robots.txt. You can find it under “Settings” > “Reading” (“Discourage search engines from indexing this site”):

However, this is not a reliable method, because pages blocked this way can still appear in search results. From WordPress 5.5 onwards, the setting adds the meta robots tag “noindex, nofollow” instead, which is more reliable.

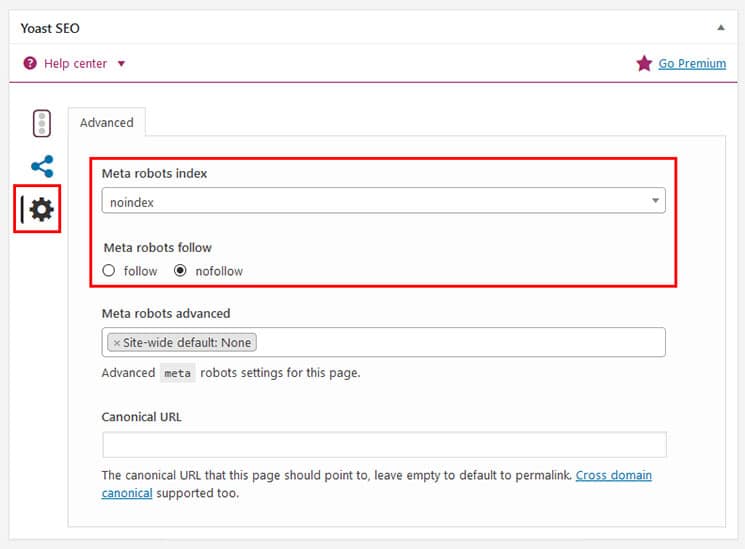

If you only want to exclude a single page from indexing in WordPress, the easiest way is to use an SEO plugin such as Yoast or All in One SEO. In Yoast, you can exclude the page in the settings of the Yoast SEO box below the page or post editor:

You can also exclude entire directories with the plugin, especially if you have a blog with a lot of categories and keywords that keep generating new URLs. Feeds, search result pages and the attachment URLs etc. can also be excluded.

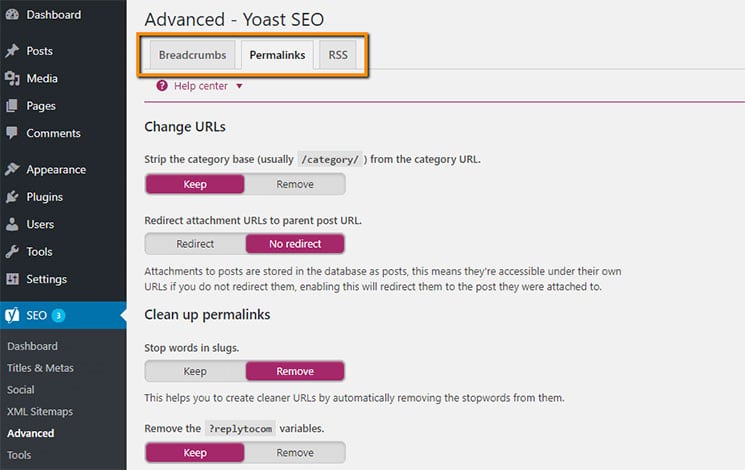

It is also possible to edit the robots.txt with the plugin, which is pretty cool!

In Google Search Console, the Coverage report shows which pages are valid and which are excluded. This gives you a clear picture of what Google considers important and what it does not.

Of course, it is worth checking every now and then whether everything is correct on the excluded pages.

6. How do I know which pages on my website are being indexed?

Enter site:example.com (with no space after the colon) in Google Search to list the pages Google has indexed for that domain. The list is not necessarily complete.

The order in which the pages are listed reflects their importance. Don’t confuse this with ranking, because a page’s position in the search results always depends on the search term the user has entered.

7. My page is still found, what should I do?

If the page continues to appear in search results, the crawler has most likely not revisited it since the noindex tag was added. In any case, you have to be patient here. According to Google, crawling and indexing are complicated processes that can sometimes take a long time for certain URLs, especially if your website is small and little new content appears.

In Search Console, you can check the URL with the URL Inspection tool and request indexing, which asks Google to crawl the page again. After all, crawlers do not visit your website all the time, especially not when nothing is happening, i.e. when there is no new content.

At the same time, check whether the noindex rule has really been removed from the robots.txt, as described above.
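This check can be automated with a small Python sketch that flags every robots.txt line whose field Google does not support. Google documents `user-agent`, `allow`, `disallow` and `sitemap` as its supported fields. Other crawlers may still read some of the flagged fields (Bing, for example, reads `Crawl-delay`), so treat the output as "lines Googlebot ignores" rather than "lines that are necessarily wrong". The function name and the sample file are made up for this example.

```python
# Fields Google documents as supported in robots.txt; Googlebot ignores all others.
GOOGLE_SUPPORTED_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}

def unsupported_lines(robots_txt: str) -> list:
    """Return (line number, original line) for every rule Googlebot ignores."""
    findings = []
    for number, raw_line in enumerate(robots_txt.splitlines(), start=1):
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue  # blank lines, comment-only lines, malformed lines
        field = line.split(":", 1)[0].strip().lower()
        if field not in GOOGLE_SUPPORTED_FIELDS:
            findings.append((number, raw_line))
    return findings

ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Noindex: /old-page/
Nofollow: /tag/
Sitemap: https://example.com/sitemap.xml
"""

for number, line in unsupported_lines(ROBOTS_TXT):
    print(f"line {number}: {line}")
# line 3: Noindex: /old-page/
# line 4: Nofollow: /tag/
```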

8. What should I not do if the page is still in the index?

If a page has already been deleted because it no longer fits at all, it is of course annoying if it still appears in the index. What you should never do, however, is block a page that is already set to noindex with a “Disallow” rule in the robots.txt. This causes the same problem as noindex in robots.txt. The crawler sees that it may not crawl the page, so it never sees the noindex on the page itself, and the page can stay in the search index.
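If you keep a list of your noindex pages (for example from your own crawl; the input format here is an assumption), a short Python sketch can flag exactly this conflict. Every URL it returns should have its Disallow rule removed so that the crawler can read the noindex. The function name and the sample URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def blocked_noindex_pages(robots_txt, pages, user_agent="Googlebot"):
    """Return URLs that carry noindex but are disallowed, so the noindex is never seen.

    `pages` maps each URL to True if its HTML contains a robots noindex meta tag
    (hypothetical input, e.g. exported from your own crawl or SEO plugin).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return sorted(
        url for url, noindex in pages.items()
        if noindex and not parser.can_fetch(user_agent, url)
    )

ROBOTS_TXT = """\
User-agent: *
Disallow: /old/
"""

pages = {
    "https://example.com/old/discontinued-product/": True,  # conflict
    "https://example.com/thank-you/": True,                  # fine: crawlable noindex
    "https://example.com/old/archive/": False,               # only blocked, no noindex
}

print(blocked_noindex_pages(ROBOTS_TXT, pages))
# ['https://example.com/old/discontinued-product/']
```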

By the way, the Disallow rule doesn’t guarantee that a page will not appear in search results. If Google considers the page relevant because it has inbound links, it can still be indexed.

In addition, and this is very important, you must not block CSS and JavaScript files, since otherwise Google cannot render the pages correctly. If they are blocked and your site is in Search Console, you should also have received an email saying that Googlebot cannot access these files.
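Before publishing a new robots.txt, you can check your stylesheet and script URLs against it. This minimal Python sketch uses `urllib.robotparser`. The `Disallow: /wp-includes/` rule and the asset URLs are hypothetical examples of a rule that would block scripts. The sketch checks only the URL path for the `.css`/`.js` extension, so version query strings do not hide it.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

def blocked_assets(robots_txt, asset_urls, user_agent="Googlebot"):
    """Return the CSS/JavaScript URLs that robots.txt prevents the crawler from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url for url in asset_urls
        # Check the path only, so version strings like ?ver=5.5 don't hide the extension.
        if urlsplit(url).path.endswith((".css", ".js"))
        and not parser.can_fetch(user_agent, url)
    ]

ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-includes/
"""

assets = [
    "https://example.com/wp-includes/js/jquery/jquery.js?ver=3.5.1",
    "https://example.com/wp-content/themes/my-theme/style.css",
]

print(blocked_assets(ROBOTS_TXT, assets))
# ['https://example.com/wp-includes/js/jquery/jquery.js?ver=3.5.1']
```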

Of course, you should never use the robots.txt to hide private content. The file is publicly readable, and its rules are only instructions that crawlers may ignore. Protect such content with a password via server-side authentication instead.

Google documents all the rules it supports for the robots.txt in its Search Central documentation.

9. The robots.txt and the XML sitemap

It is recommended to reference the XML sitemap in the robots.txt. You can also submit your sitemap in Search Console. Here, too, make sure that the sitemap does not simply list every page. Above all, leave out noindex pages and pages without added value, as described above.
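The following Python sketch builds a minimal sitemap with the standard-library `xml.etree.ElementTree` and leaves out noindex pages. The page list and the sitemap URL are hypothetical. A real sitemap is usually generated by your CMS or SEO plugin. In the robots.txt, you reference the sitemap with a single line containing its absolute URL, such as `Sitemap: https://example.com/sitemap.xml`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build a minimal XML sitemap from (url, noindex) pairs, skipping noindex pages."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, noindex in pages:
        if noindex:
            continue  # noindex pages do not belong in the sitemap
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")

pages = [
    ("https://example.com/", False),
    ("https://example.com/about/", False),
    ("https://example.com/search/shoes/", True),  # thin search result page
]

print(build_sitemap(pages))

# The matching robots.txt line points crawlers to the sitemap's (hypothetical) location:
print("Sitemap: https://example.com/sitemap.xml")
```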

10. Conclusion

Using robots.txt to control how Googlebot crawls your website is important whenever you want to exclude pages or directories. Consider carefully, or discuss with your web developer, which approach is the right one. Handling it correctly goes a long way towards making your website visible.

Use Google Search Console to track your website’s search results. Do you need help with your website’s SEO? We are happy to assist you. If you are looking for website SEO, website analytics, digital marketing services and more, please explore our SEO Services! We also provide regular website maintenance and support services. For more information, please explore our website maintenance services!